Shared Dictionary Compression for HTTP at LinkedIn.

March 4, 2015

The HTTP protocol has been the glue that holds together the Web, mobile apps and servers. In a world of constant innovation, HTTP/1.1 appeared to be the only safe island of consistency until the announcement of HTTP/2. Yet, even with HTTP being as robust and efficient as it is, there is still room for improvement, and that is what this post is about. LinkedIn’s Traffic Infrastructure team is making LinkedIn faster by exploring ways in which HTTP can be improved.

Traditionally, HTTP communications are compressed using either the gzip or the deflate algorithm. Of the two, gzip strikes a balance: it is aggressive enough to reach a good compression ratio without excessive CPU requirements. Algorithms such as the Burrows-Wheeler transform (popularized through bzip2) offer higher degrees of compression, but have higher CPU requirements. Until recently it was generally accepted that gzip is the best way to compress HTTP traffic. Yet there is an alternative to using computationally intense compression algorithms to transfer less data over the wire: send less data in the first place.

Enter SDCH

SDCH (pronounced “Sandwich”) is an HTTP/1.1-compatible extension that reduces the required bandwidth through the use of a dictionary shared between the client and the server. It was proposed by four Google employees over five years ago. It utilizes a relatively simple mechanism that has the potential to drastically reduce transmission sizes. Currently Google Chrome and other browsers based on the Chromium open-source browser project support SDCH, but until recently Google was the only Internet site to support SDCH on the server. In this post, we share an overview of how it works and our experience implementing it, and perhaps shed some light on why its use isn’t very widespread despite its remarkable potential.

SDCH is very simple at first glance. Instead of compressing every page as if it were an independent document, it takes into consideration that many sites have common phrases occurring across multiple pages - navigation bars, copyrights, company names, etc. In addition to phrases, modern pages share a good deal of CSS files, JavaScript code, etc. Once those common elements have been downloaded, there is no need to transfer them over and over again. Instead, the common elements are enumerated in a dictionary. If the browser and the server have the same revision of the dictionary, then the server can start transferring only short keys, instead of long values. The browser then uses the dictionary to replace the keys with the original phrases.
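The key-for-phrase idea can be illustrated with a toy sketch. This is not the SDCH wire format (real SDCH payloads use VCDIFF encoding); the function names, the NUL-delimited key format and the sample phrases are all assumptions made purely for illustration.

```python
import re

# Hypothetical shared dictionary: phrases that recur across many pages.
PHRASES = [
    '<div class="nav-bar">Home | Profile | Jobs</div>',
    "Copyright Example Corp. All rights reserved.",
]


def encode(text: str, phrases: list[str]) -> str:
    """Replace each dictionary phrase with a short key of the form NUL<index>NUL.

    Assumes the text contains no NUL characters and phrases is non-empty.
    """
    index = {phrase: i for i, phrase in enumerate(phrases)}
    # Try the longest phrases first so a shorter phrase never pre-empts a longer one.
    alternatives = sorted(phrases, key=len, reverse=True)
    pattern = re.compile("|".join(re.escape(p) for p in alternatives))
    return pattern.sub(lambda m: f"\x00{index[m.group(0)]}\x00", text)


def decode(encoded: str, phrases: list[str]) -> str:
    """Expand every NUL<index>NUL key back into its dictionary phrase."""
    return re.sub(r"\x00(\d+)\x00", lambda m: phrases[int(m.group(1))], encoded)


page = PHRASES[0] + "<p>Hello, world</p>" + PHRASES[1]
wire = encode(page, PHRASES)          # what the server would send
restored = decode(wire, PHRASES)      # what the browser would reconstruct
```

Both sides must hold the same `PHRASES` list in the same order; if the lists differ, the keys expand to the wrong text, which is why the dictionary revision matters.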

LinkedIn is committed to the privacy of its users, and therefore we rely on browsers advertising support for SDCH for the HTTPS scheme. Chromium does that, and other browsers will follow suit.

Negotiations

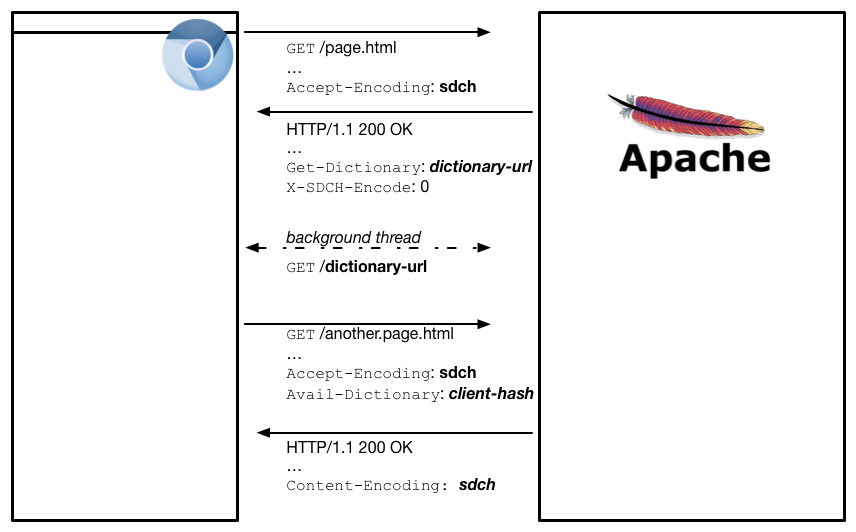

For SDCH to work, several conditions have to be met. First, both the browser and the server must support SDCH. Second, both the browser and the server must share the same revision of the dictionary - using two different dictionaries will result in broken pages due to missing style sheets, invalid JavaScript or unfinished sentences. These conditions are negotiated through HTTP headers as the browser makes a GET request. In plain English, the negotiation can be described as follows:

browser: Hi! I need to GET /page.html. And BTW, I support SDCH

server: Hi! Here is the page. And BTW, here’s URL to get SDCH dictionary!

… (browser downloads dictionary in the background) …

browser: Hi! I need to GET /another.page.html. And BTW, I support SDCH - here is my client-hash (see below)

server: Hi! Here is the page! And BTW, since your dictionary is up to date, the page is SDCH encoded!

In both phases, the client advertises SDCH support with the Accept-Encoding: sdch header. If the client has a dictionary, it advertises that with the Avail-Dictionary: client-hash header. We will talk about the hash generation later.
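As a minimal sketch of the client side of this exchange, the request headers for both phases could be built as follows. The function name and the sample hash value are hypothetical, and listing gzip and deflate alongside sdch is an assumption about what a browser would typically send.

```python
from typing import Optional


def build_request_headers(cached_client_hash: Optional[str] = None) -> dict[str, str]:
    """Return the SDCH-related request headers for a GET request."""
    # Always advertise SDCH support (gzip/deflate are assumed extras).
    headers = {"Accept-Encoding": "gzip, deflate, sdch"}
    # Only advertise a dictionary once one has actually been downloaded.
    if cached_client_hash is not None:
        headers["Avail-Dictionary"] = cached_client_hash
    return headers


first_request = build_request_headers()             # phase 1: no dictionary yet
later_request = build_request_headers("AbCdEfGh")   # phase 2: placeholder hash
```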

Once the browser is done fetching the dictionary, it is expected to advertise the availability of this dictionary by including the Avail-Dictionary header with the next request. This header contains a unique identifier for the provided dictionary called the “client hash”. At this point the server can start sending SDCH-compressed traffic (marked with the Content-Encoding: sdch header). The SDCH-compressed payload is prefixed by the “server hash”. Both hashes are derived from the SHA-256 digest of the dictionary, which allows the browser and the server to agree that they use the same dictionary.
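A sketch of the hash derivation, based on our reading of the SDCH proposal rather than on anything specific to LinkedIn's plugin: the client hash is assumed to be the first 48 bits of the SHA-256 digest and the server hash the next 48 bits, each encoded as URL-safe base64. The function name is hypothetical.

```python
import base64
import hashlib


def sdch_hashes(dictionary: bytes) -> tuple[str, str]:
    """Return (client_hash, server_hash) for the full dictionary bytes."""
    digest = hashlib.sha256(dictionary).digest()
    # Assumed layout: bytes 0-5 identify the dictionary to the server,
    # bytes 6-11 identify it to the browser.
    client_hash = base64.urlsafe_b64encode(digest[:6]).decode("ascii")
    server_hash = base64.urlsafe_b64encode(digest[6:12]).decode("ascii")
    return client_hash, server_hash


client_hash, server_hash = sdch_hashes(b"example dictionary contents")
```

Six bytes encode to exactly eight base64 characters with no padding, so both identifiers stay short enough to carry in a header or a body prefix.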

From this point on the server can start SDCH compression: it substitutes the long common strings included in the dictionary with shorter representations, includes sdch in the Content-Encoding header and prepends the body with the server hash.

The client receives the response and decodes the content by reversing the steps the server took.
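The framing around the encoded body can be sketched as below. The substitution step itself (VCDIFF encoding and decoding) is left out and the payload is treated as opaque bytes. The 8-character hash length and the NUL byte separating the hash from the payload come from our reading of the SDCH proposal and should be treated as assumptions; the function names are hypothetical.

```python
SERVER_HASH_LEN = 8  # assumed: 48 bits, URL-safe base64 encoded


def frame_response(server_hash: str, encoded_body: bytes) -> bytes:
    """Server side: prepend the server hash (plus a NUL byte) to the encoded body."""
    if len(server_hash) != SERVER_HASH_LEN:
        raise ValueError("server hash must be 8 characters")
    return server_hash.encode("ascii") + b"\x00" + encoded_body


def unframe_response(body: bytes, expected_server_hash: str) -> bytes:
    """Client side: verify the hash prefix and return the still-encoded payload."""
    prefix = body[:SERVER_HASH_LEN]
    separator = body[SERVER_HASH_LEN:SERVER_HASH_LEN + 1]
    if separator != b"\x00" or prefix != expected_server_hash.encode("ascii"):
        raise ValueError("response was not encoded with the dictionary we hold")
    return body[SERVER_HASH_LEN + 1:]


framed = frame_response("IjKlMnOp", b"<opaque encoded bytes>")
payload = unframe_response(framed, "IjKlMnOp")
```

Checking the prefix before decoding lets the client detect a dictionary mismatch instead of producing a garbled page.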

Making Sandwiches

SDCH looks so simple at first glance that it makes one wonder why it isn’t more popular. After all, all you need to do is to generate a dictionary of long common strings found in your static content and have a web server that supports SDCH. How hard can that be? Let’s find out:

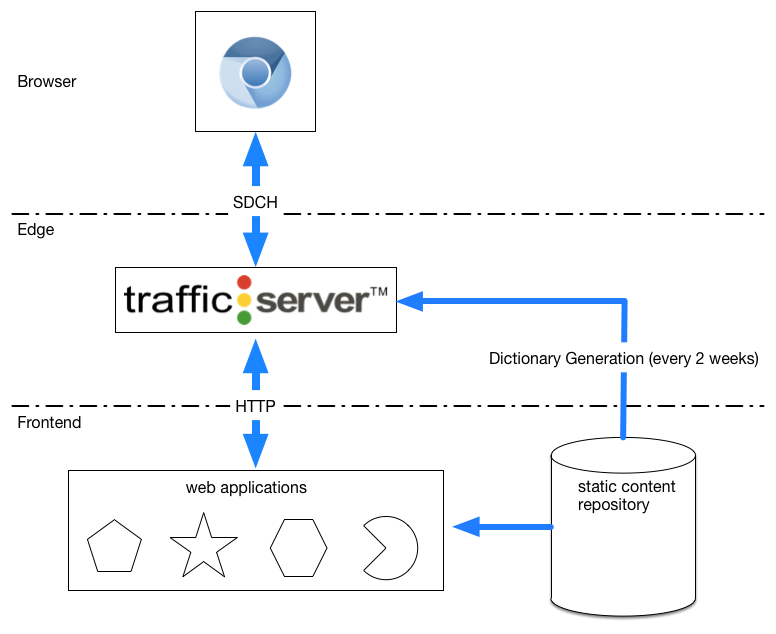

First, you need to generate a dictionary of long common strings from your static content. The open-source solution Femtozip by Garrick Toubassi seems to be the only available solution at this point. We ran into a performance issue, fixed it, and contributed the patch back. Running SDCH in production requires generating the dictionary fairly often, ideally every time we deploy a front-end application. That comes at the price of extra deployment time, about 7 hours for our production dataset. At LinkedIn we deploy up to 3 times a day, so we opted for “near-line” dictionary generation - we generate the dictionary in the background every two weeks. At this cadence we can benefit from SDCH compression without paying the overhead at every deployment.

The second challenge is presented by versioning of static content. Every company has a different process for versioning and building their static content and this step may be challenging for some as dictionary generation may take a very long time. For websites that have a large collection of static content, generation of the dictionary can take days. A map-reduce approach can potentially speed up dictionary generation, but this is beyond the scope of this post. Let’s assume, for the sake of simplicity, that you didn’t have that many files and haven’t had any issues with this; now you have a dictionary for your static content and you are serving it from your main site under the “/my_dictionary” partial URL.

The third thing you need is a web server that supports SDCH. You are out of luck on this one as there are currently only a few publicly available solutions for serving SDCH-encoded content. You will need to code your own module/plugin/webserver for this purpose. Since we extensively use Apache Traffic Server at LinkedIn, our experiment involves a custom Traffic Server plugin that uses atscppapi and open-vcdiff libraries. We are in the process of open-sourcing the plugin.

Challenges

Femtozip outputs a dictionary that can be used for SDCH with minor modifications. You need to prepend it with the SDCH dictionary headers so that the browser knows on which domain this dictionary can be used and under which paths it is valid.

We discovered that Chrome aggressively blacklists domains and does not tell you why your domain is blacklisted. This turned debugging into an arduous task. Fortunately, the Yandex Browser has a tab under its net-internals page that gives you the error codes, which you can conveniently look up in sdch_manager.h in the Chromium source code.

What we discovered is that if the browser advertises its dictionary using the Avail-Dictionary header, the server is expected to encode the response body with SDCH no matter what. Even if the response doesn’t contain sdch in the Content-Encoding header, the browser will still try to decode the received body, fail, and blacklist your domain from further SDCH use until the browser restarts. If the server doesn’t want to encode with SDCH and the client has advertised an available dictionary, the server has to include the X-Sdch-Encode: 0 header in the response to explicitly indicate that the response body is not SDCH-encoded.
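The two server-side requirements in this section can be sketched together. This is an illustration under stated assumptions, not the logic of our Traffic Server plugin: the function names are hypothetical, the `Domain:` and `Path:` dictionary header fields and the `Get-Dictionary` response header are taken from our reading of the SDCH proposal, and header lookup is assumed to be exact-case.

```python
def with_sdch_headers(raw_dictionary: bytes, domain: str, path: str) -> bytes:
    """Prepend the dictionary headers (ended by a blank line) to Femtozip output."""
    header = f"Domain: {domain}\nPath: {path}\n\n".encode("ascii")
    return header + raw_dictionary


def response_headers(request_headers: dict[str, str], current_client_hash: str,
                     dictionary_url: str, can_encode: bool) -> dict[str, str]:
    """Choose the SDCH-related response headers for a single request."""
    accept = request_headers.get("Accept-Encoding", "")
    tokens = [t.split(";")[0].strip().lower() for t in accept.split(",")]
    if "sdch" not in tokens:
        return {}  # client does not support SDCH at all

    advertised = [h.strip()
                  for h in request_headers.get("Avail-Dictionary", "").split(",")
                  if h.strip()]
    if current_client_hash in advertised and can_encode:
        return {"Content-Encoding": "sdch"}

    headers = {}
    if advertised:
        # A dictionary was advertised but the body is NOT SDCH-encoded:
        # say so explicitly, or the browser may blacklist the domain.
        headers["X-Sdch-Encode"] = "0"
    if current_client_hash not in advertised:
        # Point the client at the current dictionary revision.
        headers["Get-Dictionary"] = dictionary_url
    return headers


stale = response_headers(
    {"Accept-Encoding": "gzip, sdch", "Avail-Dictionary": "OldHash1"},
    current_client_hash="NewHash2", dictionary_url="/my_dictionary", can_encode=True)
```

Here `stale` carries both `X-Sdch-Encode: 0` and a pointer to the new dictionary, so a client holding an outdated revision neither mis-decodes the body nor stays stuck on the old dictionary.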