Main takeaways

- Make some sort of plan for managing your EC2 costs that's proportionate to how important the cost savings are to your business.

- EC2 Spot is good enough to serve most customer-facing workloads.

- Move all autoscaling workloads to EC2 Spot, as by definition these are semi-ephemeral and tolerant of host churn.

- A mix of Reserved Instances and AWS Savings Plans can be very efficient, and flexible enough to deal with both increases and decreases in spend.

- Use a small number of levers to optimise day-to-day spend.

I work at Intercom, a mature B2B SaaS startup, and I've worked on managing our AWS costs in some way since I joined back in 2014. Intercom is all-in on AWS as our cloud provider. Intercom's primary AWS account was created in July 2011, spending a gigantic $19.00 on an RDS MySQL cluster. Our AWS bill has grown a bit since then, along with our business.

Do what matters

We have a cost management program at Intercom, though it is nobody's full-time job. The costs program is run by a Technical Program Manager alongside other programs such as availability, and I provide technical strategy, domain expertise and context from being a relative old-timer at Intercom. The program covers every part of the hosting component of our Cost of Goods Sold (COGS), an important business metric for SaaS businesses, and that hosting component is predictably dominated by our AWS bill. Money matters to any business, but we try to keep our cost management activities proportionate to their impact on our business. Like most startups, the reality is that Intercom will not succeed or fail because of our AWS bill. A wildly successful business is rarely predicated on how efficient its infrastructure spend is; it is predicated on delivering value to customers and successfully monetizing that value. However, a predictable COGS margin can be important for the valuation of a business, and cashflow can also matter from time to time. What matters most is that we have a plan in place: a strategy and approach to cost management that our engineering and finance leadership teams have bought into. With all that said, here are some juicy details about how we manage our EC2 bill.

Why we care so much about EC2

EC2 instances make up around one third of Intercom's AWS bill, and that's after considerable optimization. We primarily use EC2 instances to serve our Ruby on Rails monolith, which at any moment uses well over a thousand EC2 instances, ranging from m5.large to r5.24xlarge, spread over 300+ autoscaling groups. We don't really use Kubernetes, ECS or other container-based systems, as (fortunately?) we got good at deploying buildpack-managed applications using EC2 autoscaling groups before these systems existed. We also manage a number of Elasticsearch clusters, also built on top of EC2 instances, using a mix of OpsWorks and custom automation built with Systems Manager. We run Elasticsearch this way for a few reasons: when we started using it we already had a number of other applications managed with OpsWorks, third-party hosting solutions were either non-existent or immature, and we have benefited from having complete control over the clusters. If you're interested in this architecture, my colleague Andrej Blagojevic gave a great talk about it, available on YouTube.

Intercom's use of EC2

With 300+ autoscaling groups and a few large Elasticsearch clusters, we have a wide variety of usage patterns to manage and optimise. Our Elasticsearch clusters tend to grow slowly, with occasional bursts while upgrades and reimports are in flight. These hosts need to live for a long time and the fleets are scaled manually. Host churn here can easily cause clusters to lose data, so stability is paramount. Most of the hosts running our customer-facing monolith run asynchronous worker jobs. Some of these are quasi-real-time, with customer-facing impact if the workers can't process jobs as quickly as they are created. Many others are web servers, directly processing customer requests from browsers, mobile phones or API clients. And then there's a long tail of other workers running asynchronous jobs that can be processed eventually, such as sending daily summary emails to our customers about their usage of Intercom. Finally, there's a long tail of other applications running on EC2, some of them customer-facing, like our websocket process service, and a bunch of internal-facing services such as IT servers and Airflow.

Our Ruby on Rails monolith has the largest footprint, and it works well on anything from an m5.large upwards. We dynamically size the number of processes running on a host according to its number of CPUs, so we can easily drop a host of any size into any worker or web fleet. The workloads can be very variable, with a lot of autoscaling in response to customer demand. Our product team keeps building new features which often require new infrastructure, and we're constantly doing things like backfills and upgrades that can require a lot of infrastructure. For the first few years of Intercom we deployed the monolith to on-demand EC2 hosts and spent a lot of time managing EC2 reservations to make this cost-effective.
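The post doesn't say how the process count is derived from the CPU count, so here is a minimal sketch assuming a simple linear rule. The `PROCESSES_PER_CPU` ratio and the `process_count` helper are hypothetical. The point is only that a single formula lets the same deploy run unchanged on any host size.

```python
import os

# Hypothetical ratio: the post does not state the real processes-per-CPU figure.
PROCESSES_PER_CPU = 1.5


def process_count(cpu_count=None, per_cpu=PROCESSES_PER_CPU, minimum=1):
    """Return how many app processes to start on this host.

    Scales linearly with the host's CPU count, so one deploy configuration
    works on an m5.large as well as on an r5.24xlarge.
    """
    if cpu_count is None:
        # os.cpu_count() returns None if the count can't be determined.
        cpu_count = os.cpu_count() or 1
    return max(minimum, int(cpu_count * per_cpu))
```

With this ratio, an m5.large (2 vCPUs) would get 3 processes and an r5.24xlarge (96 vCPUs) would get 144.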

A few years ago we had a particularly great AWS account manager. He used to tell us things all the time like "you need to sign an EDP" and "you need to use more spot instances". Eventually, we did these things, and it turned out that he was totally correct. We started to use large amounts of spot instances for various asynchronous jobs. We got used to the limitations and built out tooling and monitoring to run these hosts well in our environment. The capabilities around managing EC2 spot workloads improved over time, and we got increasingly confident in our use of EC2 spot as we were able to take advantage of using multiple instance types for any particular workload. Spot started to become a satisfyingly substantial part of our EC2 bill.

Around the time we were able to use EC2 Autoscaling Groups with Mixed Instances, we started to experiment with using them for some of our directly customer-facing APIs and web servers. We made a few tweaks to our health checks, adjusted various autoscaling settings and made sure we were running a diverse range of instance types across multiple availability zones.

Over the course of 2020 we moved all asynchronous workers, and ultimately the vast majority of the servers running our customer-facing Ruby on Rails monolith, over to spot. We left a portion of hosts on-demand to ensure baseline stability for particularly sensitive workloads such as our web application, but all autoscaling capacity for those workloads also moved to spot. We aim for 99.9% uptime of our application. In practice, we find that spot hosts are available when we need them, and spot host churn has not threatened our uptime. We're not alone in this: others, such as Basecamp, have also reported being able to run customer-facing workloads on spot.
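The "on-demand baseline, spot for everything autoscaling adds" split maps onto an Auto Scaling group's mixed instances policy. Here is a minimal sketch that builds the `MixedInstancesPolicy` structure accepted by boto3's `create_auto_scaling_group` and `update_auto_scaling_group`. The helper name, the use of `$Latest` for the launch template version and the `capacity-optimized` spot allocation strategy are my assumptions, not settings the post confirms.

```python
def build_mixed_instances_policy(launch_template_name, instance_types, on_demand_base):
    """Build a MixedInstancesPolicy: a fixed on-demand baseline, spot for the rest."""
    if not instance_types:
        raise ValueError("need at least one instance type")
    return {
        "LaunchTemplate": {
            "LaunchTemplateSpecification": {
                "LaunchTemplateName": launch_template_name,
                "Version": "$Latest",
            },
            # Each override is an alternative instance type the group may launch,
            # giving spot more capacity pools to draw from.
            "Overrides": [{"InstanceType": t} for t in instance_types],
        },
        "InstancesDistribution": {
            # The first N instances in the group are on-demand...
            "OnDemandBaseCapacity": on_demand_base,
            # ...and all capacity added above that baseline is spot.
            "OnDemandPercentageAboveBaseCapacity": 0,
            # Assumption: prefer the spot pools with the most spare capacity.
            "SpotAllocationStrategy": "capacity-optimized",
        },
    }
```

The result would be passed as `MixedInstancesPolicy=policy` to `boto3.client("autoscaling").update_auto_scaling_group(AutoScalingGroupName=...)`, which requires AWS credentials and an existing launch template.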

Thanks to our large Elasticsearch fleet and historically efficient cost management, we have a large number of 3-year convertible Reserved Instances that need to be managed alongside our spot fleets. The launch of Savings Plans was undoubtedly a big win for us because they apply automatically to all EC2 usage, just as earlier reservation features such as convertibility, regional scope and instance family flexibility had made our cost management program more efficient.

However, it wasn't obvious how we would manage our existing EC2 reservations alongside Savings Plans. The reservations were already reasonably flexible, since they were convertible and our AWS usage was almost entirely in one region. I also realised that Savings Plans were missing a feature of EC2 reservations that we had taken advantage of from time to time: EC2 reservations could be combined with other reservations, with the total value of the combined reservations carried over to a new reservation ending on the latest end date among them. This meant that if our EC2 usage dropped, as did happen from time to time, we could "stretch" some reservations to apply over a longer period and ensure zero wastage. Our monthly bill wouldn't necessarily drop, but the reservations would be applied and amortised over a longer period, so our overall expense would drop. We couldn't do this with Savings Plans, so we decided on a hybrid strategy: maintain an amount of EC2 reservations that could be stretched when our EC2 usage dropped, plus Savings Plans that would efficiently mop up all sorts of compute usage.
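The "stretch" arithmetic can be illustrated with a deliberately simplified model: keep the total remaining value of the combined reservations and spread it evenly up to the latest end date. This sketch ignores the real exchange rules for convertible Reserved Instances (pricing, equal-or-greater value, instance types), and the `Reservation` type and `stretch` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Reservation:
    hourly_value: float     # dollars of usage covered per hour
    remaining_hours: float  # hours left until the reservation expires


def stretch(reservations):
    """Combine reservations into one that ends with the latest of them.

    Simplified model: the remaining value is preserved and spread over the
    longest remaining term, so hourly coverage drops while the total value
    that eventually gets used stays the same.
    """
    if not reservations:
        raise ValueError("need at least one reservation")
    total_value = sum(r.hourly_value * r.remaining_hours for r in reservations)
    longest = max(r.remaining_hours for r in reservations)
    return Reservation(hourly_value=total_value / longest, remaining_hours=longest)
```

For example, two reservations worth $10/hour each, one with 1,000 hours left and one with 3,000, combine into a single reservation worth about $13.33/hour for 3,000 hours. That is less coverage per hour for the same total value, so nothing is wasted if usage has fallen to around that level.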

In practice, this means we do the following:

- Move all autoscaling workloads to spot.

- Ensure 100% utilisation of all EC2 reservations.

- Aim for "good" utilisation of Savings Plans, which for us means >90% utilisation. 100% Savings Plans utilisation usually means usage is also spilling over onto on-demand rates all the time, which we try to avoid, so aiming for a bit of buffer results in the most efficient spend for us.

- Understand our Savings Plans usage. Trends are easy to see by looking at where Savings Plans are being applied on an hourly basis. As we avoid autoscaling with on-demand hosts, looking at where Savings Plans are applied and watching for variable utilisation is high-leverage work for keeping our environment efficient.
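The utilisation target and the hourly view can be made concrete with a small calculation. This is a minimal sketch assuming you already have per-hour compute usage that a Savings Plan could cover, in the same $/hour units as the commitment and excluding anything already covered by reservations, which are applied first. The function and its inputs are hypothetical; in practice the data would come from Cost Explorer or the Cost and Usage Report.

```python
TARGET_UTILISATION = 0.90  # the ">90%" target described above


def savings_plan_summary(hourly_eligible_spend, hourly_commitment):
    """Return (utilisation, overflow_hours) for a run of hourly samples.

    hourly_eligible_spend: per-hour usage a Savings Plan could cover,
        in the same $/hour units as the commitment (hypothetical input).
    hourly_commitment: the Savings Plans commitment in $/hour.
    """
    if not hourly_eligible_spend or hourly_commitment <= 0:
        raise ValueError("need at least one hour and a positive commitment")
    # In any hour the plan can absorb at most the committed amount.
    used = sum(min(spend, hourly_commitment) for spend in hourly_eligible_spend)
    utilisation = used / (hourly_commitment * len(hourly_eligible_spend))
    # Hours where usage exceeded the commitment spilled over to on-demand rates.
    overflow_hours = sum(1 for spend in hourly_eligible_spend if spend > hourly_commitment)
    return utilisation, overflow_hours
```

With hourly usage of `[80, 100, 120, 95]` against a commitment of 100, this gives 93.75% utilisation with one hour of on-demand overflow, which is inside the target band.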

If there is constant on-demand use, we first move some hosts from on-demand to spot. I call this our "pressure valve": for one of our larger workloads I keep a variable number of hosts that can be trivially moved between on-demand and spot. This provides day-to-day and week-to-week cover, making it easy to respond to relatively small or temporary changes in EC2 use. If the on-demand use becomes persistent, I'll purchase some additional Savings Plans and move the variable portion of the workload back to on-demand.

If there is constant underutilisation of Savings Plans and the pressure valve has been exhausted, I'll reduce the baseline of on-demand use covered by EC2 reservations by stretching one or more reservations, and move a portion of the workload back to

We also do all the regular cost optimisation work, such as looking for rightsizing opportunities and forgotten test servers. Autoscaling thresholds are also kept under regular review, especially for our larger workloads.

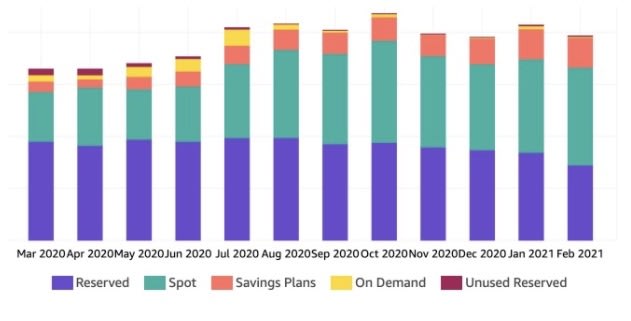

What does this look like in practice?

We've been running this process for the last 6 months and, despite our business and infrastructure growing, we've managed to keep our EC2 spend under control and run more efficiently than ever. Here is the breakdown of our spend on EC2 instances over the last 6 months:

| Purchase option | Share of EC2 instance spend |
|---|---|
| Spot | 44.33% |
| Reservations | 42.77% |
| Savings Plans | 11.96% |
| On-demand | 0.78% |
| Unused reservations | 0.01% |

Maintaining this setup involves checking in once every week or two, looking at a few dashboards in Cost Explorer and making changes as needed. The decision tree is well defined, so the work is straightforward and quick. We plan to automate some of this work soon.
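The weekly decision tree can be written down as a single function, which is roughly what automating it would start from. This is a hypothetical sketch, not Intercom's tooling. Two branches are my own reading rather than something the post states: moving valve hosts from spot back to on-demand when Savings Plans are underutilised (implied by "the pressure valve has been exhausted"), and flagging for manual review when usage spills onto on-demand but the valve is already all on spot.

```python
def next_action(spilling_to_on_demand, spill_is_persistent, sp_utilisation,
                valve_hosts_on_demand, valve_size, target=0.90):
    """Suggest the next step of the weekly cost review (hypothetical helper).

    valve_hosts_on_demand: how many "pressure valve" hosts currently run
        on-demand, out of valve_size hosts that can move freely.
    """
    if spilling_to_on_demand:
        if spill_is_persistent:
            # Sustained growth: commit to more Savings Plans, refill the valve.
            return "buy Savings Plans, then move valve hosts back to on-demand"
        if valve_hosts_on_demand > 0:
            # Small or temporary growth: shift valve hosts onto spot.
            return "move valve hosts from on-demand to spot"
        # Assumption: not covered by the post, so leave it to a human.
        return "review manually"
    if sp_utilisation < target:
        if valve_hosts_on_demand < valve_size:
            # Soak up idle commitment by moving valve hosts onto on-demand.
            return "move valve hosts from spot to on-demand"
        # Valve exhausted: lower the reserved baseline instead.
        return "stretch one or more reservations"
    return "no change"
```

Keeping the rules in one place like this makes it easy to feed in numbers from Cost Explorer on a schedule and only involve a person when the answer is something other than "no change".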

Update: this mechanism not only works pretty well, but was also independently discovered and implemented by ProsperOps. Their solution is entirely automated, unlike my largely manual efforts here, and I can recommend taking a look at their product!

Spot thoughts

Intercom is almost entirely in us-east-1, AWS's largest region, which helps with spot host availability. We exclusively use 5th generation instances, and in practically all of our autoscaling groups we list every eligible instance type for the host size we want a particular workload to run on, i.e. every combination of the n, d and a variants in each instance family. Here's a screenshot from the autoscaling group of our largest customer-facing workload:
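Generating the "every combination of n, d and a" list by hand is error-prone, so here is a small sketch that builds it. The suffix list is an assumption based on the variants the m5 and r5 families offer. Not every family has every variant or size, so the output is a candidate list to check against what your region actually offers (for example with the EC2 DescribeInstanceTypeOfferings API).

```python
# Assumption: 5th-generation variant suffixes available in the m5 and r5
# families. Other families may lack some of these, so validate the output.
VARIANT_SUFFIXES = ["", "a", "d", "n", "ad", "dn"]


def candidate_instance_types(families, size):
    """List every family/variant combination for a single instance size."""
    return [
        f"{family}{suffix}.{size}"
        for family in families
        for suffix in VARIANT_SUFFIXES
    ]
```

For example, `candidate_instance_types(["m5"], "xlarge")` yields `m5.xlarge`, `m5a.xlarge`, `m5d.xlarge`, `m5n.xlarge`, `m5ad.xlarge` and `m5dn.xlarge`, which could then be fed into a mixed instances policy's overrides.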

I've recently been experimenting with suspending the AZRebalance process for some workloads, as it seems to cause unnecessary host churn without obviously improving our availability. Stability is generally more important to us than being perfectly balanced across availability zones: hosts regularly going in and out of service reduces capacity, whereas large-scale availability events where we need hosts in multiple availability zones to stay up are uncommon.
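Suspending a single Auto Scaling process is a one-call change. This sketch only builds the keyword arguments so it can run without AWS access. The helper name and the group name in the comment are hypothetical, while `suspend_processes` and `resume_processes` are real boto3 Auto Scaling client methods that take these arguments.

```python
def az_rebalance_request(group_name):
    """Keyword arguments for suspend_processes / resume_processes.

    Usage (requires AWS credentials; the group name is hypothetical):
        import boto3
        client = boto3.client("autoscaling")
        client.suspend_processes(**az_rebalance_request("example-web-asg"))
        # ...and later, to undo the experiment:
        client.resume_processes(**az_rebalance_request("example-web-asg"))
    """
    return {
        "AutoScalingGroupName": group_name,
        # Only AZRebalance is suspended; launches, terminations and health
        # checks carry on as normal.
        "ScalingProcesses": ["AZRebalance"],
    }
```

Because the change is reversible per group, it suits the kind of gradual, per-workload experiment described above.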

I am bullish on EC2 Spot being suitable for customer-facing workloads. AWS is making increasingly loud noises about this and actively promoting it. In theory and in practice, spot hosts can and do regularly go away. However, with a configuration that uses a diverse range of instance types across a diverse range of availability zones, I think the actual availability of a fleet of spot hosts comes pretty close to that of on-demand.

Back when I worked at AWS, I was at one of the (in)famous Wednesday Ops meetings, and some poor manager was presenting the operational metrics of their service. One of the latency metrics had an obvious but stable increase after a deployment. The manager explained what happened - some feature had gone out that had resulted in a latency change, but that was ok because it was inside their documented latencies. My memory is pretty hazy by now and maybe I am getting some details wrong, but I am pretty sure it was Charlie Bell who interjected here, and I'm going to paraphrase what he said: "You don't get to do this. Your operational performance is your effective SLA, and you don't get to unilaterally change it. Customers rely on your actual operational performance and you can't get away with changing your latencies while referencing your documentation that nobody reads". I think this is how EC2 spot can generally be thought of these days - yes, individual hosts can be taken away, and yes there'll be shortages of some hosts in some AZs, but the overall operational performance of EC2 Spot will be consistently reliable and worthy of building your business on top of, as actual operational performance is what AWS obsesses about.

Summary

Your mileage may vary, but for Intercom a mix of Reserved Instances, Savings Plans and EC2 Spot provides an effective, cost-efficient EC2 platform to reliably build our business on.
